Researchers have trained a neural network to listen to the sounds of coral reefs and help identify what is happening on them.

Using acoustics to decipher reef activity is an important part of conservation efforts. However, analyzing the recordings by hand can be very slow. The neural network stands out for the speed at which it processes these acoustic signals.

The network can process the data up to 25 times faster than human researchers, and it has proven to be as accurate as human experts listening to the reef recordings.

Commenting on the work and its findings, study author Seth McCammon, an assistant scientist in applied ocean physics and engineering at the Woods Hole Oceanographic Institution, said:

“But for the people that are doing that, it’s awful work, to be quite honest. It’s incredibly tedious work. It’s miserable. It takes years to analyze data to that level with humans. The analysis of the data in this way is not useful at scale.”

He added:

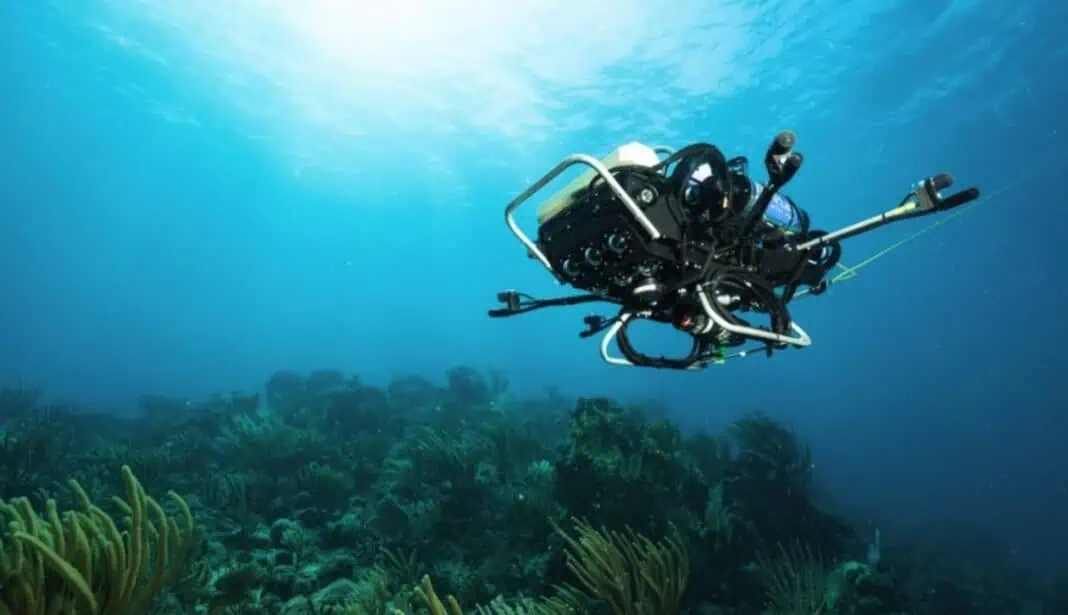

“Now that we no longer need to have a human in the loop, what other sorts of devices — moving beyond just recorders — could we use? Some work that my co-author Aran Mooney is doing involves integrating this type of neural network onto a floating mooring that’s broadcasting real-time updates of fish call counts. We are also working on putting our neural network onto our autonomous underwater vehicle, CUREE, so that it can listen for fish and map out hot spots of biological activity.

“For the vast majority of species, we haven’t gotten to the point yet where we can say with certainty that a call came from a particular species of fish. That’s, at least in my mind, the holy grail we’re looking for. By being able to do fish call detection in real-time, we can start to build devices that are able to automatically hear a call and then see what fish are nearby.”
